OpenCap

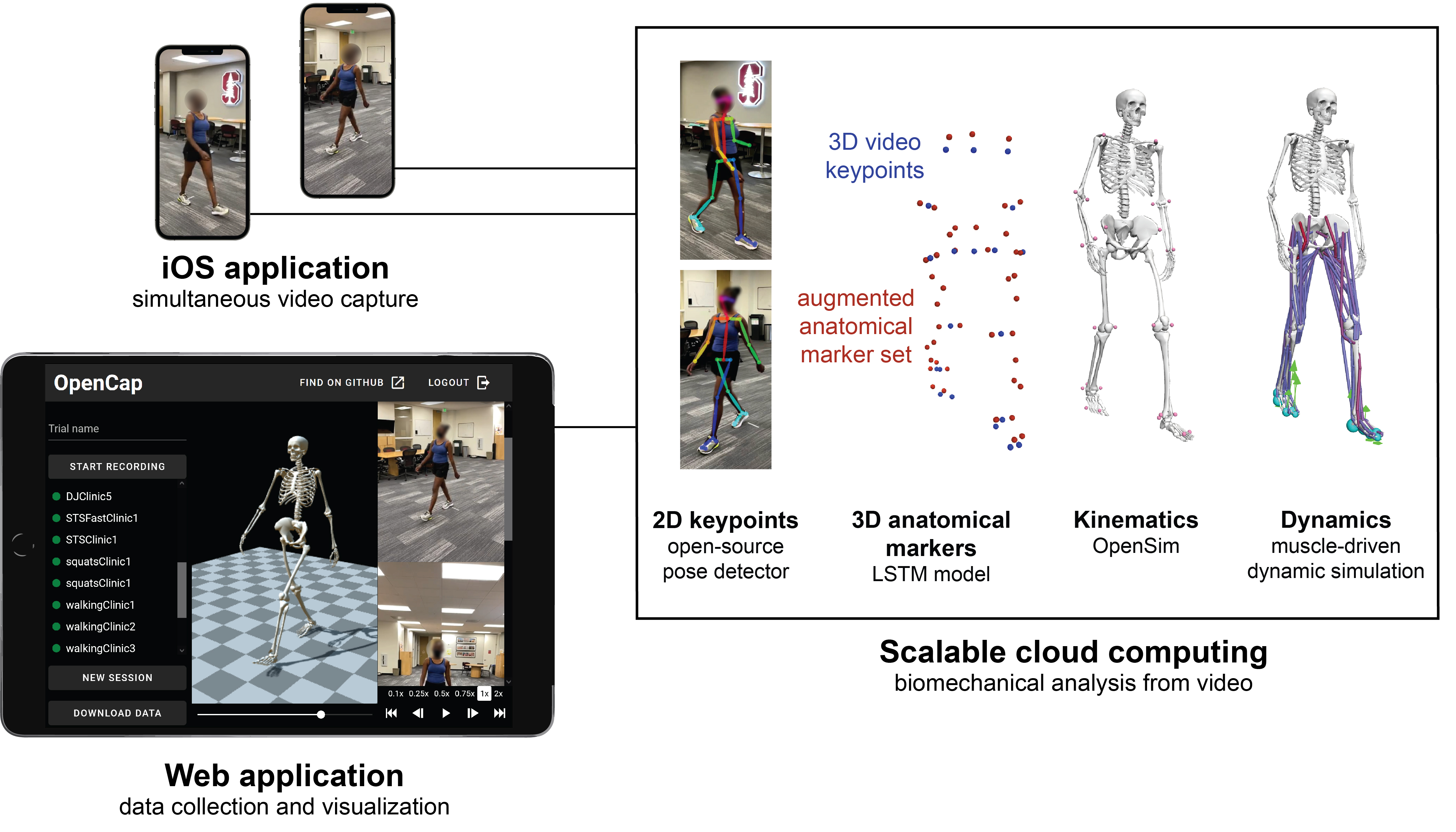

OpenCap is a software package to quantify human movement from smartphone videos. OpenCap combines computer vision, deep learning, and biomechanical modeling and simulation to estimate the kinematics (i.e., motion) and kinetics (i.e., musculoskeletal forces) of movement from two or more videos. OpenCap comes with an iOS application and a web application, and is deployed in the cloud. To get started, visit opencap.ai.

OpenCap is open-source under the Apache 2.0 license and is available on GitHub. The backend can be found in this repository. OpenCap uses a deep learning model to predict anatomical markers from sparse video keypoints. The model was trained using this code. Finally, to estimate kinetics (i.e., musculoskeletal forces), OpenCap relies on muscle-driven musculoskeletal simulations. You can find examples of how to generate such simulations in this repository. More details are available in the preprint of our paper.